“Capturing 7 of 10 spots in the 10-Client Production list is more than a technical achievement – it’s proof that leading enterprises count on DDN to deliver the speed, scale, and reliability needed to turn data into competitive advantage.”

Summary:

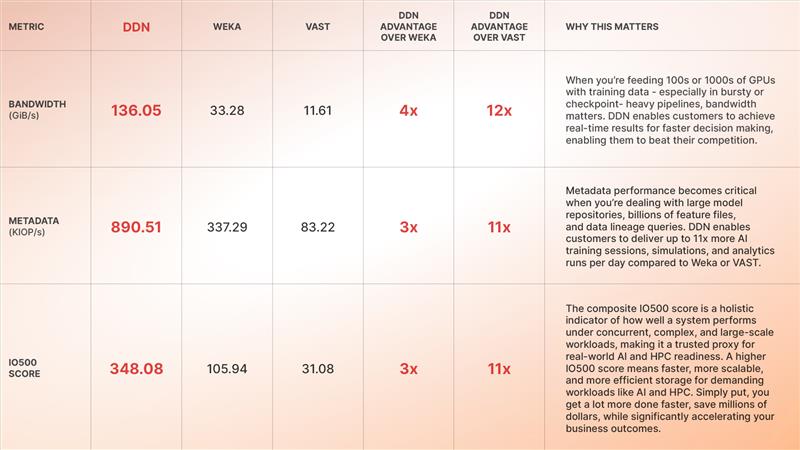

- DDN dominates the IO500 benchmark, outperforming VAST and WEKA by up to 11x across real-world AI and HPC workloads.

- DDN delivers unmatched bandwidth, metadata handling, and efficiency – capturing 7 of the top 10 spots in the IO500 10-node production benchmark.

- Leading enterprises achieve breakthrough results with DDN, from 70% faster fraud detection to 80% shorter genomics runtimes.

If you rely on GPUs, AI workloads, or HPC simulations, you need the best technology to achieve the best outcome. Whether your mission is curing diseases, protecting financial markets, or transforming entire industries with generative AI, your outcomes hinge on data center and cloud infrastructure performance. IO500 confirms what top innovators already know: DDN isn’t just leading the pack – we’ve left it behind entirely.

IO500 Proves It: DDN Leads and Delivers 3x to 11x More Value Than Other Data Platform Technologies

IO500 isn’t just another benchmark – it’s the gold standard for assessing real-world storage performance for AI and HPC, and a vital tool for deciding which technologies to deploy and which vendors to rely on. Unlike synthetic benchmarks that test only isolated, hypothetical conditions that rarely exist in the real world, IO500 evaluates real data center GPU and compute infrastructure under realistic, demanding conditions: mixed workloads, complex I/O patterns, metadata-intensive operations, and concurrent tasks. These are exactly the conditions that real-world business and scientific AI and HPC environments face daily. This comprehensive test provides clear, credible insights into how infrastructure will perform when it matters most.

In the vital 10-node production category, where most customer use cases are found and where real-world AI workloads run under intense pressure, DDN isn’t just ahead – it’s redefining what “ahead” means. That translates into significant value for you: value in innovating, creating better products and services, and pushing the envelope of what is possible.

Why This Matters for Your AI and HPC Workloads

AI and HPC cannot tolerate slowdowns—every minute of wasted compute time drains both budget and innovation momentum. Competitors offer theory; DDN delivers measurable, transformative results. Topping the IO500 rankings proves DDN delivers exceptional end-to-end performance that translates into real business benefits:

- Huge cost savings through hardware efficiency: A large U.S. hedge fund transitioned to DDN and saw a 3x improvement in algorithm development speed, drastically reducing runtime costs and accelerating ROI. It also cut fraud detection latency by 70%, saving millions in operational costs and significantly reducing financial risk.

- Estimated impact: Increasing throughput from, say, 10 backtests per day to 30–35 backtests per day, saving hundreds of thousands annually in GPU and engineer time.

- Simulation and data pipeline acceleration: DDN-powered genomics workflows—such as those at the Translational Genomics Research Institute (TGen)—have slashed pipeline times from 12 hours to under 2 hours, enabling researchers to iterate faster, increase productivity, and reduce compute costs by over 80%.

- Boosting Sovereign AI: A national defense program sustained data flows of 2 TB/s during simultaneous model training and data ingestion, boosting efficiency, slashing operational costs, and drastically improving time-to-insight.

- Massively enhanced cluster efficiency: Supercomputing centers like CINECA (used for tsunami forecasting), Helmholtz Munich, and Bitdeer AI leverage DDN EXAScaler to maximize GPU utilization and drive unprecedented AI/HPC throughput, allowing customers to pack more workloads per cluster and lowering both CapEx and OpEx across the board.

These examples aren’t theoretical – they’re real, quantifiable impacts enabled exclusively by DDN.
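For readers who want to check the arithmetic behind the figures quoted above, here is a minimal Python sketch. It uses only the numbers stated in the examples (a pipeline going from 12 hours to 2 hours, and backtests going from 10 per day to 30–35 per day). The function names are illustrative assumptions, not part of any DDN tool, and because the source says "under 2 hours", the 2-hour value gives a lower bound on the improvement. Dollar savings are not computed, since the examples give no GPU or staffing rates.

```python
def speedup(before_hours: float, after_hours: float) -> float:
    """Return how many times faster a job runs after the change."""
    return before_hours / after_hours


def percent_reduction(before: float, after: float) -> float:
    """Return the percentage by which a quantity shrank."""
    return (before - after) / before * 100.0


def throughput_gain(before_per_day: float, after_per_day: float) -> float:
    """Return the ratio of new daily throughput to old daily throughput."""
    return after_per_day / before_per_day


# Genomics pipeline: 12 hours down to (at most) 2 hours.
pipeline_speedup = speedup(12, 2)
pipeline_reduction = percent_reduction(12, 2)

# Backtesting: 10 runs per day up to 30-35 runs per day.
backtest_gain_low = throughput_gain(10, 30)
backtest_gain_high = throughput_gain(10, 35)

print(f"Pipeline speedup: at least {pipeline_speedup:.1f}x")
print(f"Pipeline runtime reduction: at least {pipeline_reduction:.1f}%")
print(f"Backtest throughput gain: {backtest_gain_low:.1f}x to {backtest_gain_high:.1f}x")
```

Under these inputs, the runtime reduction works out to a little over 83%, consistent with the "over 80%" figure, and the backtest range corresponds to the roughly 3x improvement cited for the hedge fund.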

Here’s the Tech That Leaves Competitors Behind

DDN’s infrastructure isn’t retrofitted – it’s built specifically for AI and HPC workloads:

- Blistering Multi-Terabyte Bandwidth: Proven in massive-scale environments like NVIDIA’s Selene and CINECA’s supercomputing clusters, enabling real-time training, inference, and simulation.

- Unmatched Metadata Handling: Seamlessly handles billions of files and random I/O at scale, unlike competitors who stumble and delay your critical workflows.

- Native AI Parallelism: Zero-compromise parallel data access designed specifically for GPU-intensive workloads – no shim layers, no workarounds, pure performance.

- Intelligent Pipeline-Aware Tiering: Optimized caching ensures maximum GPU utilization, dramatically reducing latency and idle times.

- Bulletproof Enterprise Resilience: Built-in redundancy and robust data protection trusted by industry leaders including NVIDIA, HPE, Dell, Lenovo, and others.

Why Competitors Fall Short

Here’s why other providers struggle:

- WEKA requires ongoing manual tuning, leading to unpredictable performance, wasted time, and unnecessary operational expenses.

- VAST handles some read-heavy scenarios adequately but severely lags in crucial metadata operations and write-heavy AI workloads, hindering performance and limiting scalability.

- Hammerspace promotes orchestration but lacks significant deployments in high-pressure AI/HPC environments, as its single IO500 submission clearly demonstrates.

Your GPUs Deserve the Best—DDN

Every investment in AI infrastructure should maximize innovation and accelerate your goals, not bog you down in performance issues.

DDN ensures you achieve:

- Maximum GPU Efficiency

- Fastest Time from Data to Insight

- Proven Global Leadership and Scalability

Don’t settle for outdated infrastructure. Demand better. Demand DDN.

Explore the full IO500 results or contact our team today to see how powerful your AI infrastructure can truly become.