Success Story

NVIDIA and DDN Collaborate to Solve the World’s Greatest Challenges with End-to-End Supercomputing Solutions

KLA is a leader in process control using advanced inspection tools, metrology systems, and computational analytics, supporting chip manufacturing for a broad range of device types, including advanced logic and memory (3D NAND, DRAM, MRAM, etc.), power devices, RF communications devices, LEDs, photonics, MEMS, and more. Chip manufacturers use KLA’s products to accelerate their development and product ramp cycles and to improve the overall profitability of chip manufacturing.

To detect anomalies in the manufacturing process, inspection systems must identify defects as small as 10 nm, quickly and with few false positives. KLA chose DDN to architect a data management solution with the write speed needed to keep up with enormous data processing demands and to safeguard the efficiency and reliability of the manufacturing process.

The multi-step workflow involves capturing high-resolution images, performing image processing and segmentation, and analyzing the data to identify and classify defects. This process can generate up to 300 TB of raw data per wafer, which is analyzed against large data sets, with the goal of completing a run on a wafer in under an hour. Such a data-intensive process requires a robust, scalable data infrastructure that delivers fast write performance, feeds massive amounts of data to the computing systems, and improves data center efficiency.

“Virtual Inspector” mass storage device that stores inspection data during defect detection scans

Runs on the DDN EXAScaler Lustre (ES400X2) platform with sustained write speeds of over 40 GB/s

6.5+ PB of scalable NVMe flash storage
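For a rough sense of scale, the sketch below converts the sustained write rate above into an hourly volume and estimates how long continuous writing would take to fill the 6.5 PB flash tier. It assumes decimal units (1 PB = 1,000 TB, 1 TB = 1,000 GB), a perfectly steady 40 GB/s, and no protocol or RAID overhead; the function names are hypothetical and for illustration only.

```python
# Back-of-envelope write-throughput arithmetic for the KLA figures.
# Assumptions: decimal units (1 PB = 1,000 TB, 1 TB = 1,000 GB), a constant
# sustained write rate, and no protocol, RAID, or filesystem overhead.
SECONDS_PER_HOUR = 3600


def tb_written_per_hour(write_gb_per_s: float) -> float:
    """Terabytes absorbed in one hour at a constant sustained write rate (GB/s)."""
    return write_gb_per_s * SECONDS_PER_HOUR / 1_000


def hours_to_fill(capacity_pb: float, write_gb_per_s: float) -> float:
    """Hours of continuous writing needed to fill capacity_pb petabytes."""
    return capacity_pb * 1_000 / tb_written_per_hour(write_gb_per_s)


if __name__ == "__main__":
    print(f"{tb_written_per_hour(40):.0f} TB per hour")
    print(f"{hours_to_fill(6.5, 40):.1f} hours to fill 6.5 PB")
```

Because the source states both figures as minimums (“over 40 GB/s”, “6.5+ PB”), the results (about 144 TB per hour, and roughly 45 hours to fill the flash tier) are approximations rather than measured values.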

“We have limited access to our system, so we really needed something that was a single platform that would scale with ease.”

Krishna Muriki, HPC Systems Design & HPC Architect, KLA

Jump Trading is a leading data and research-driven trading firm that focuses on algorithmic and high frequency trading.

Jump Trading prides itself on its unique practices that allow its quantitative analysts to function at maximum productivity. At Jump, quants are encouraged to operate independently and innovate using whatever methods they see fit.

The firm’s lack of central control removes the rigid bureaucracy of traditional firms and allows it to experiment and move at a much faster pace. However, this strategy comes at the price of some serious infrastructure challenges. To meet them, Jump turned to DDN’s equally unique QLC technology to improve the efficiency of its compute resources.

Letting quantitative analysts choose their own approaches gives the firm a strategic advantage in outpacing the competition, but it makes enforcing efficient I/O nearly impossible. To make this strategy work, Jump required a robust and highly efficient compute infrastructure capable of handling diverse workloads concurrently, with little to no processing latency.

DDN 400NVX2 QLC systems

Simple NVMe-oF backend

“DDN QLC systems are a really important part of that environment to get IO to our researchers as quickly as possible.”

Alex Davies, Chief Technology Officer, Jump Trading

NVIDIA pioneered accelerated computing to tackle challenges no one else can solve. Their work in AI and digital twins is transforming the world’s largest industries and profoundly impacting society.

By consolidating the power of an entire data center into a single platform, NVIDIA has revolutionized how complex machine learning workflows and AI models are developed and deployed in the enterprise. This work also led to Selene, one of the world’s fastest computers in total performance, and to the first commercially available system of its kind, the NVIDIA DGX SuperPOD™ Solution. NVIDIA seeks to reduce the complexity of developing increasingly diverse AI models, including conversational AI, recommender systems, computer vision workloads, and autonomous vehicles.


To shorten time to solution, NVIDIA needs to distribute large computational problems across hundreds of systems operating in parallel, using an integrated set of compute, network, and storage building blocks. This approach requires extremely fast storage to support intensive processing demands. High degrees of parallel access to both small and large files are critical, along with seamless horizontal scaling so the system can grow incrementally.

More than 300 all-flash and hybrid storage appliances

Seamless deployment and optimization on premises and in the cloud

Simple appliance model with powerful 2U building block

“The real differentiator is DDN. I never hesitate to recommend DDN. DDN is the de facto name for AI Storage in high performance environments.”

Marc Hamilton, VP, Solutions Architecture & Engineering, NVIDIA

Thailand's First Sovereign AI Cloud Pioneers Digital Transformation with DDN

Siam AI is Thailand’s first and only NVIDIA Cloud Partner (NCP), founded with a bold mission: to propel the nation into a new era of digital transformation. By building world-class, sovereign AI infrastructure locally, Siam AI enables Thai researchers, students, healthcare providers, and businesses to harness the power of AI. With an eye on healthcare, smart cities, and large language model (LLM) development, Siam AI is laying the foundation for Thailand’s AI future—with DDN at the core of its infrastructure.

Deployed First 1,000-GPU Cluster Using DDN’s Intelligent Data Platform

Siam AI fully adopted DDN’s AI-optimized data infrastructure to manage performance-intensive AI workloads.

Future-Ready Architecture with H200 and Liquid Cooling

Siam AI is already planning next-gen deployments including H200 GPUs and liquid-cooled Blackwell architecture – all integrated with DDN for maximum scalability and efficiency.

Optimized Data Management for Complex AI and ML Workloads

DDN’s platform maximized read/write performance and GPU utilization and improved checkpointing efficiency, all crucial for LLMs, genome sequencing, and smart city AI initiatives.

“We chose to work with DDN because DDN Data Intelligence platform significantly enhances our ability to manage complex AI and machine learning workloads by providing unparalleled read and write speeds, maximizing GPU utilization, improving all the checkpoint efficiency. They have experience; they have the track record and the most important thing is that they are NVIDIA certified. So to work with them, I believe, is the best way for you to start your cluster.”

Ratanaphon Wongnapachant, Founder & CEO, Siam AI

Powering AI at Scale with DDN

AI Cloud Service Provider

United Arab Emirates

Core42, a subsidiary of the Abu Dhabi-based G42 group, specializes in deploying and operating IT infrastructure for AI workloads. As a global provider of GPU-as-a-Service, Core42 is focused on making AI computing more accessible and affordable by leveraging the best available technologies.

To meet the growing enterprise demand for AI, Core42 has partnered with DDN to enhance performance, scalability, and cost efficiency in AI deployments worldwide.

Cost-Optimized AI Infrastructure

Future-Proof AI Factories

High-Performance, Scalable Data Intelligence Platform

“I would say the starting point is performance and scalability. We are deploying AI factories that have to handle, by definition, a lot of data in and a lot of data out. We are running heavy compute on the data. We are in the constant search of storage solutions that can enable us both from a performance and a scalability standpoint. Obviously, we also request these solutions to be price competitive.”

Edmondo Orlotti, Chief Growth Officer, Core42

Artificial Intelligence

Data Intelligence

xAI, led by CEO Elon Musk, is pioneering a new frontier in artificial intelligence with its colossal Memphis-based supercomputer, Colossus. Designed to power xAI’s next-generation Grok model, Colossus is set to become one of the world’s most advanced AI supercomputers, with a scale and speed previously unimaginable.

Partnering with DDN and NVIDIA, xAI deployed 100,000 NVIDIA GPUs, powered by DDN’s advanced data intelligence platform. This infrastructure enables Colossus to handle massive datasets, high-velocity AI model training, and real-time inference, positioning it as a leader in both scale and performance.

With a vision to push the boundaries of AI’s potential, xAI needed an infrastructure capable of supporting 200,000 NVIDIA GPUs to train and deploy Grok effectively, and a solution that could keep up with the extreme computational requirements that this scale implies.

DDN’s collaboration with NVIDIA provided xAI with an integrated, high-efficiency data platform to meet these ambitious goals. The DDN Infinia and EXAScaler solutions enabled Colossus to scale up AI operations seamlessly. This architecture not only accelerated training but also maintained optimal efficiency under an immense computational load.

Key Features of the Solution:

Data Center & Cloud Optimization: DDN solutions streamlined data pathways, reducing overhead by 75%, minimizing costs, and optimizing compute and network performance.

AI Framework/LLM Acceleration: DDN’s platform accelerated large language model (LLM) performance up to 10x, shortening time-to-market for AI applications and lowering GPU consumption.

Data Orchestration and Movement Optimization: DDN ensured smooth data flow across edge, data center, and cloud environments, cutting latency and improving scalability.

The collaboration between xAI, DDN, and NVIDIA transformed Colossus into an AI powerhouse capable of groundbreaking performance in natural language processing, AI model training, and real-time AI inference.

“Colossus is the most powerful AI training system in the world. Moreover, it will double in size to 200k (50k H200s) in a few months. Excellent work by the team, NVIDIA and our many partners/suppliers.”

Elon Musk, CEO, xAI

“Complementing the power of 100,000 NVIDIA Hopper GPUs connected via the NVIDIA Spectrum-X Ethernet platform, DDN’s cutting-edge data solutions provide xAI with the tools and infrastructure needed to drive AI development at exceptional scale and efficiency, helping push the limits of what’s possible in AI.”

Dion Harris, Director of Accelerated Data Center Product Solutions, NVIDIA

Bitdeer AI Cloud and DDN: Powering the Future of AI Development

Bitdeer AI is at the forefront of AI computing, providing high-performance AI cloud solutions to enterprises, startups, research labs, and businesses exploring AI. Their mission is to democratize AI computing, making it accessible beyond large tech companies. To achieve this, Bitdeer AI needed a robust storage solution capable of handling large-scale AI model training, inference, and workflow optimization. By partnering with DDN, Bitdeer AI Cloud has been able to scale efficiently, optimize performance, and reduce infrastructure bottlenecks, ultimately delivering an enhanced AI experience to its customers.

High-Performance Storage with Low Latency

DDN’s data intelligence platform provides the high throughput and low latency required for large-scale AI model training.

End-to-End AI Workflow Optimization

The combination of DDN’s high-bandwidth storage and parallel file system compatibility enhances AI model training, reduces bottlenecks, and improves efficiency.

Seamless Integration with Bitdeer AI Cloud

DDN’s robust infrastructure integrates with Bitdeer AI Cloud to optimize performance and enable scalable AI solutions.

Bitdeer AI provides a wide range of AI solutions across industries, accelerating business outcomes and enabling new capabilities. Their AI training and inference platform allows users to set up a development environment with Jupyter Notebook and select single or multi-GPU resources to start training and inference with a single click. Additionally, their workflow platform simplifies AI development with modular components such as input, processing, and output modules, allowing businesses to build AI-driven applications efficiently.


By leveraging DDN’s industry-leading Data Intelligence platform, Bitdeer AI is transforming the future of AI computing, enabling businesses worldwide to innovate faster and more efficiently.

“DDN Data Intelligence platform provides scalability and high-performance storage, keeping up with the massive AI workloads. The combination of low-latency access and high bandwidth and the parallel file system compatibilities enable our customers to have faster training of their models and reduce bottlenecks and optimize their AI workflow.”

Louis Xu, Head of Bitdeer AI Cloud

The leading pioneer in biology since 1896, Roche develops innovative medicines, treatments and diagnostics that revolutionize healthcare.

Over its 125-year history, Roche has grown into one of the world’s largest biotech companies, a leading provider of in-vitro diagnostics, and a global supplier of transformative innovative solutions across major disease areas.

Roche’s instruments are getting faster, with higher resolution and finer granularity, and the company recognized that it was facing a parallel data problem. Roche chose NVIDIA GPUs as the parallel processing engine, complemented by DDN A³I storage, so that data could be generated quickly and processed rapidly, producing results faster and more efficiently and enabling Roche to unlock early cancer detection when it matters most.

Being 100% cloud-based worked well early in development, but after Roche added more sensors and began generating more data (up to 2 PB every 24 hours), the approach became too cumbersome and slow to continue. The limitations of a fully cloud-based setup forced users to compromise their analysis and discard 99% of their data, and turnaround time for critical experiments exceeded two weeks.
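To put the Roche figures in throughput terms, the sketch below converts 2 PB per 24 hours into an average ingest rate and shows how much data survives when 99% is discarded. It assumes decimal units and perfectly even data generation across the day (real sequencing runs are burstier, so peak rates would be higher); the helper names are hypothetical.

```python
# Back-of-envelope data-rate arithmetic for the Roche sequencing figures.
# Assumptions: decimal units (1 PB = 1,000 TB = 1,000,000 GB) and data
# generated at an even rate over the full 24 hours.
SECONDS_PER_DAY = 24 * 60 * 60


def average_ingest_gb_per_s(pb_per_day: float) -> float:
    """Average rate (GB/s) needed to absorb pb_per_day petabytes in 24 hours."""
    return pb_per_day * 1_000_000 / SECONDS_PER_DAY


def retained_tb_per_day(pb_per_day: float, discard_fraction: float) -> float:
    """Terabytes kept per day when discard_fraction of the data is thrown away."""
    return pb_per_day * 1_000 * (1 - discard_fraction)


if __name__ == "__main__":
    print(f"{average_ingest_gb_per_s(2):.1f} GB/s average ingest")
    print(f"{retained_tb_per_day(2, 0.99):.1f} TB/day retained")
```

Under these assumptions the instruments produce roughly 23 GB/s on average, and discarding 99% would leave only about 20 TB of the daily 2 PB for analysis.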

An end-to-end solution backed by hybrid NVMe and dense disk storage for Nanopore sequencing

DDN A³I with NVIDIA H100 GPUs for a fast system in a small footprint

Simple expansion with standard building blocks

“The solution DDN had, for what we needed, just made sense. We needed a small footprint and something very fast so we could process the data as quickly as possible – time is money.”

Chuck Seberino, Director of Accelerated Computing, Roche Sequencing Solutions

Accelerating Scientific Discovery with High-Performance Data Intelligence

Scripps Research, founded in 1924, is one of the leading nonprofit research institutions in the world, specializing in biomedical sciences. The Ward Lab at Scripps Research focuses on cryo-electron microscopy (cryo-EM) and structural biology, studying virology and immunology to advance vaccine and therapeutic developments. However, with the rapid expansion of cryo-EM capabilities, the lab faced significant challenges in managing the exponential growth of data. By implementing a high-performance data intelligence platform, Scripps Research optimized data storage, accessibility, and processing, leading to faster research outcomes and groundbreaking discoveries in vaccine development.

Scalable High-Performance Data Platform

A robust infrastructure was built to handle real-time data ingestion, storage, and processing, enabling 24/7 research activities.

Seamless Access and Uptime

The platform provided high availability and ensured researchers could interact with their data effortlessly from anywhere.

Accelerated AI and HPC Integration

Advanced AI and GPU computing streamlined protein modeling, significantly reducing the time required for structural analysis and drug discovery.

“Having a high-performance data intelligence platform at your disposal allows you to spend more time on the research that you’re interested in. Our scientists are able to come here, log in, collect their data, process their data, and not have to worry about where it’s stored, whether it’s still going to be there the next week, or if they are running out of space.”

Charles Bowman, Staff Scientist, Ward Lab, Scripps Research

Protecting The People With HPC-Enabled Urgent Computing

CINECA is one of the largest and most advanced computing centers in Italy, specializing in high-performance computing (HPC), data management, and scientific visualization.

CINECA has been at the forefront of high-performance computing in Italy since its founding in 1969. Over the years, it has continually expanded its infrastructure and expertise, becoming a pivotal hub for advanced computational research in Europe.

One of CINECA’s most critical tasks is what it calls “urgent computing”: producing a result within a small, fixed time window. These tasks generally involve life-saving work such as natural disaster prediction and epidemic modeling. Completing them within such a narrow window presents an enormous infrastructure challenge that demands exceptional speed and efficiency. To ensure its newest cluster, Leonardo, could take on these tasks at the required speed, CINECA turned to DDN’s EXAScaler software.

For example, tsunami forecasting is an extremely time-sensitive task that involves modeling the impact at coastal locations based on real-time information once a tsunami is detected. Previously, CINECA and most other computing centers had to wait 20 minutes from detection until the forecast was complete. Now, with DDN EXAScaler, CINECA can complete a forecast within 5 minutes, gaining 15 minutes of potentially life-saving time for evacuations.

DDN EXAScaler software

DDN ES400NVX2 All Flash Tier systems

DDN ES7990X + 62 SS9012 + 4 ES400NVX HDD Tier systems

“This kind of power needs an extremely well-optimized storage environment to gain maximum efficiency. We chose DDN because of its ability to accelerate all stages of the AI and HPC workloads lifecycle.”

Mirko Cestari, HPC and Cloud Technology Coordinator, CINECA

The National Center for Supercomputing Applications (NCSA) at the University of Illinois, an R1 research institution, provides high-performance computing, cloud computing, research-focused data platforms, and expert support to thousands of scientists and researchers across the United States.

The NCSA currently operates 9 distinct compute environments, with many users having access to multiple systems. In their previous data environment, however, users had to transfer data between these systems manually, taking up precious time and system resources. To remedy this and unify their computing environments under one data environment, the NCSA turned to DDN’s expertise to help create Taiga, a global parallel file system designed to let users focus on their data, rather than managing where it is.

The NCSA operates nine distinct compute environments, each with its own specialized pipelines, making data movement cumbersome for researchers. Their users needed a unified data platform to seamlessly access and store data across multiple compute environments, while ensuring scalability and performance for efficient processing and adaptability.

Taiga, a global parallel file system designed to span across multiple compute environments.

Three 400NVX/400NVX2 units and one 18KX unit (combined 25 PB of NVMe and HDD capacity)

DDN EXAScaler software

NVIDIA HDR InfiniBand networking

“DDN’s EXAScaler platform provides us with the performance and flexibility we need to give thousands of researchers a central, performant namespace for all their active data needs.”

JD Maloney, Lead HPC Engineer, NCSA

Empowering Innovation: Purdue’s Data-Intensive Research Landscape

Purdue University’s Rosen Center for Advanced Computing is at the forefront of data-intensive research, providing support in fields such as aerospace, agriculture, life sciences, and semiconductors. Since 2005, Purdue has relied on upwards of 50 petabytes of DDN’s data platform to support complex work such as advances in nanotechnology and groundbreaking atomic catalyst discoveries.

Recently, Purdue established the Purdue Computes Initiative, which aims to grow GPU utilization across campus in response to rapidly increasing student interest in computing and artificial intelligence (AI). Amid this growing interest, particularly in AI, Purdue recognized the importance of maintaining a capable data environment in terms of both capacity and transfer speed. To ensure its students’ and faculty’s success in paving the way for the future of AI, Purdue turned to DDN to architect a data platform upgrade for its Gilbreth cluster.

Purdue faced a significant challenge in managing the escalating volume and diversity of data being processed and accessed on their Gautschi cluster. Maintaining maximum data transfer efficiency and IOPS performance was of primary concern to ensure that, as data libraries increase in size and quantity, students and faculty can perform operations and iterate at the speed of innovation.

Gautschi is an NVIDIA H100 SuperPOD used for modeling, simulation, and AI workloads

3 DDN EXAScaler ES400NVX2-SE nodes

Providing 9 PB of raw QLC flash capacity

“This investment in resources will ensure that Purdue faculty have access to cutting-edge resources to ensure their competitiveness, and they can focus on scientific outcomes instead of technology problems.”

Preston Smith, Executive Director, Purdue Rosen Center for Advanced Computing

The University of Florida has a mission to ensure that every student across every discipline has the knowledge of what AI is and how to use it in their area of expertise upon graduation. From religion to agriculture, and liberal arts to engineering, every student will have worked with the AI curriculum and will be able to apply that knowledge to advance the field they are studying in.

To support this goal, the university required an upgrade to its existing compute infrastructure, HiPerGator, that would place it among the most powerful AI supercomputers in the world.

UF faced several challenges in its mission to create a top-tier computing environment. As research data and computational needs expanded rapidly, UF’s existing infrastructure struggled to keep pace with the growing demands of its many users across departments. And because the cluster is shared among departments for various computational tasks, completing jobs in a timely manner would be essential to maintaining a swift research pace and a good experience for end users.

HiPerGator 3.0: an NVIDIA DGX SuperPOD with DDN A³I, which became recognized as the world’s fastest AI supercomputer in academia

140 NVIDIA DGX A100 systems (1120 GPUs), 4PB DDN AI400X all-flash storage

Mellanox 200 Gb/s InfiniBand networking

“We have a history of success with DDN storage systems powering our HPC computing and anticipate similar high productivity for our AI workloads.”

Erik Deumens, Director of Information Technology, University of Florida

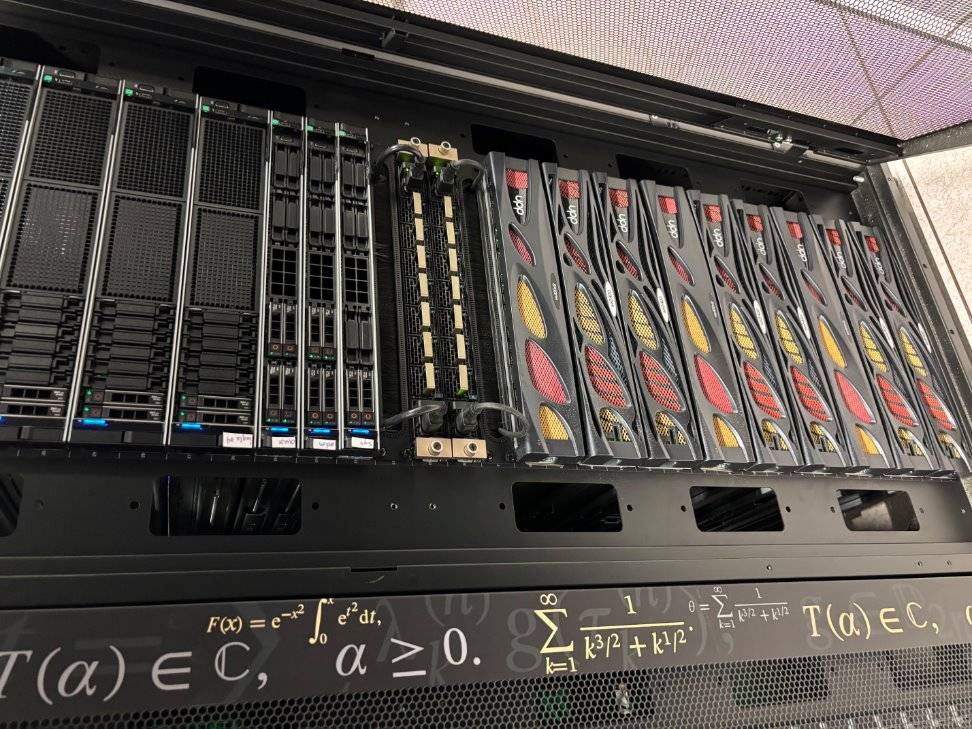

BioHPC, within the Lyda Hill Department of Bioinformatics, supports research at the University of Texas Southwestern, giving researchers easy access to the sophisticated technology needed for advances in molecular biology.

The research being done at UT Southwestern will eventually lead to better treatments for human disease, from cancer and neurodegenerative diseases to viral infections. With a powerful cryo-electron microscope, researchers can quickly create three-dimensional structures that machine learning can use to recognize patterns and speed up discovery. The BioHPC department has orchestrated fast storage, fast compute, and fast networking to give researchers an accessible infrastructure that is as easy to use as an online shared drive.

The BioHPC group brings together a large group of mathematicians and computer scientists who work on various aspects of data science and pattern recognition in biomedical data. These pattern recognition problems are heavily data-driven and require very large data sets, making it critical to have the data storage and data processing completely integrated.

A 150PB integrated storage system

Multiple 200 Gb InfiniBand and 40 Gb Ethernet links

82 Object Storage Targets

“For an academic medical center, it’s definitely a privilege to have this level of technology development, fully integrated with the biomedical research.”

Professor Gaudenz Danuser, Professor & Chair, Lyda Hill Dept. of Bioinformatics, University of Texas Southwestern

As part of the Helmholtz Association, Germany’s largest research organization, Helmholtz Munich is one of 19 research centers that develop solutions and technologies for the world of tomorrow.

The research integrates Artificial Intelligence (AI) methodologies and spans multiple data-intensive computational applications. Some of these applications include decoding plant genomes, tissue sample testing or creature and epidemiology cohort studies. The knowledge that the centers gain from this research forms the foundation of tomorrow’s medicine and delivers concrete benefits to society and improvements to human health.

Helmholtz Munich comprises multiple institutes that focus on different areas of research, all of which need to move, store, and process large amounts of data. The storage infrastructure was limiting compute performance and undermining hardware reliability. While these systems may have been appropriate for the modest workloads they were initially deployed for, they couldn’t scale to meet the needs of more modern and sophisticated approaches to analyzing research data.

A centralized DDN storage system using a global EXAScaler Lustre file system

Four fully populated systems that span a global namespace

SFA NVMe ES400NVX system with GPU integration

“Because we pre-emptively set up high-performance DDN systems, Helmholtz Munich was well-equipped to manage and quickly access the massive data sets generated by this new wave of AI applications.”

Dr Alf Wachsmann, Head of DigIT Infrastructure & Scientific Computing, Helmholtz Munich

C-DAC is the Government of India’s premier organization for research and development in IT, electronics, and associated areas.

Since 1988, C-DAC has led India’s efforts in strengthening national technological capabilities and responding to global developments in the field. As a dedicated institution for high-end R&D, C-DAC has been at the forefront of the IT revolution, consistently innovating on emerging technologies.

With the latest advances in neural networks, C-DAC sought to expand its supercomputer portfolio with its largest cluster to date, PARAM Siddhi, built with the industry’s best components to help India solidify its position as a leader in AI.

C-DAC was tasked with procuring a cluster powerful enough to serve as India’s AI and HPC specific cloud computing infrastructure. The cluster needed to be shared across multiple user bases including academia, R&D institutes and start-ups, and to do so with little to no processing latency. The cluster would also need to locally store India’s massive data sets from areas like healthcare and agriculture in a high-throughput and efficient data platform.

NVIDIA DGX SuperPOD with DDN A³I data platform, codenamed PARAM Siddhi and known as the fastest supercomputer in India

42 NVIDIA DGX A100 systems (336 GPUs)

10.5PB of DDN A³I data platform

EXAScaler Software with 250 GB/s read performance

NVIDIA Mellanox HDR InfiniBand network

“We had to establish data centers in 15 different locations within the country. For all of those we needed storage, and we chose DDN for this requirement.”

Sanjay Wandhekar, Senior Director, C-DAC